Preface

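Microservices bring sound design and architectural ideas, but they also add operational complexity, especially in service deployment and monitoring. A monolith is centralized: everything runs together as one unit, so deploying and managing it is simple. Microservices form a distributed mesh with many services to maintain, so deploying and maintaining them is more complex. With Docker integrated, services can be deployed and orchestrated easily, and centralized log management with ELK makes it easy to aggregate Docker logs.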

Log4j2 Related

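Using Log4j2

Below are the official Log4j2 performance test results:

Maven Configuration

<!-- Spring Boot dependencies -->

Note:

- The logging starter inside spring-boot-starter must be excluded explicitly before spring-boot-starter-web is added; otherwise the logging brought in by starter modules added later is not excluded automatically.

- Disruptor must be version 3.3.8 or later.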

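Enabling Global Async Logging and Disruptor Settings

Official documentation: https://logging.apache.org/log4j/2.x/manual/async.html#AllAsync

Once the Disruptor dependency is added, a single startup parameter is all that is needed:

-Dlog4j2.contextSelector=org.apache.logging.log4j.core.async.AsyncLoggerContextSelector

It can also be set as a system property when the program starts.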
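As a minimal sketch of the programmatic route, the class below sets the same property before anything else runs. The class and method names are hypothetical; only the property key and selector class come from the startup parameter above. The property has to be set before the first Logger is created, so a static Logger field in the main class would defeat it, because static fields are initialized before main runs.

```java
public class AsyncLoggingBootstrap {

    // Key and value taken from the -D startup parameter shown above.
    static final String SELECTOR_KEY = "log4j2.contextSelector";
    static final String ASYNC_SELECTOR =
            "org.apache.logging.log4j.core.async.AsyncLoggerContextSelector";

    // Hypothetical helper: call this before any Logger is created.
    static void enableGlobalAsync() {
        System.setProperty(SELECTOR_KEY, ASYNC_SELECTOR);
    }

    public static void main(String[] args) {
        enableGlobalAsync();
        // In a real application, SpringApplication.run(...) would follow here.
        System.out.println(SELECTOR_KEY + "=" + System.getProperty(SELECTOR_KEY));
    }
}
```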

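To check whether Disruptor is in effect, set a breakpoint in AsyncLogger#logMessage.

Increase the queue size:

-DAsyncLogger.RingBufferSize=262144

Set the policy applied when the queue is full to Discard. Otherwise the default is to block, and asynchronous logging then behaves no differently from synchronous logging:

-Dlog4j2.AsyncQueueFullPolicy=Discard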
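The difference between discarding and blocking can be illustrated with a plain bounded queue. This is only an analogy, assuming a small ArrayBlockingQueue in place of the Disruptor ring buffer; it is not how Log4j2 implements its policy, and the class name is hypothetical.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

public class QueueFullPolicyDemo {

    // Enqueues events without ever blocking; returns how many were dropped.
    static int enqueueWithDiscard(BlockingQueue<String> queue, int events) {
        int dropped = 0;
        for (int i = 0; i < events; i++) {
            // offer() returns false immediately when the queue is full.
            if (!queue.offer("event-" + i)) {
                dropped++;
            }
        }
        return dropped;
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(4);
        int dropped = enqueueWithDiscard(queue, 10);
        System.out.println("queued=" + queue.size() + ", dropped=" + dropped);
        // By contrast, queue.put("x") at this point would block the calling
        // thread until a consumer frees a slot, which is the blocking case.
    }
}
```

With no consumer draining the queue, the caller in the discard case never waits; in the blocking case the application thread would stall on the log call.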

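System Clock Parameter

The clock is specified with log4j2.clock. The default is SystemClock; org.apache.logging.log4j.core.util.CachedClock can be used instead. For other options, see the implementations of the Clock interface, or implement Clock yourself.

Log4j System Properties Configuration File

The global async and system clock settings above are all set through system properties; the same settings can also go into a configuration file.

Define a log4j2.component.properties file under resources:

Log4jContextSelector=org.apache.logging.log4j.core.async.AsyncLoggerContextSelector

Simple Configuration in application.yml

logging:

Detailed Configuration in log4j2.xml

First, a look at the commonly used output format specifiers: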

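- %d{yyyy-MM-dd HH:mm:ss.SSS}: the timestamp, with millisecond precision.

- %-5level|%-5p: the log level; -5 means left-aligned with a fixed width of 5 characters, padded with spaces if shorter.

- %t|%thread: the thread name.

- %c{precision}|%logger{precision}: the Logger name; {precision} controls how much of the name is kept. For example, %c{1} gives Foo and %c{3} gives apache.commons.Foo.

- %C{precision}|%class{precision}: the class that actually issued the log call. If a class has a subclass (Son extends Father) and log.info is called in Father, %C{1} prints Father.

- %M|%method: the name of the method that issued the log call.

- %L|%line: the line number of the log call.

- %msg{nolookups}: the log message; {nolookups} disables built-in lookups. For example, with logger.info("Try ${date:YYYY-MM-dd}"), omitting {nolookups} would output Try 2019-05-28.

- %n: a line break, usually placed after %msg.

- %xEx|%xwEx: the exception; the latter adds an empty line at the start and end of the exception output. Unlike %ex, these append jar information to each line of the stack trace.

- %clr: color, e.g. %clr{field}{color}. The colors are blue, cyan, faint (unclear what color this is meant to be; the output appears black), green, magenta, red and yellow.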
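To make the {precision} behavior concrete, here is a small sketch that mimics the positive-integer form only, using the org.apache.commons.Foo example from the list. The class and method are hypothetical and are not Log4j2's own abbreviation code, which supports further precision formats.

```java
import java.util.Arrays;

public class LoggerNamePrecision {

    // Keeps the rightmost `precision` dot-separated segments of a logger name.
    static String abbreviate(String loggerName, int precision) {
        String[] parts = loggerName.split("\\.");
        if (precision <= 0 || precision >= parts.length) {
            return loggerName; // nothing to cut
        }
        String[] kept = Arrays.copyOfRange(parts, parts.length - precision, parts.length);
        return String.join(".", kept);
    }

    public static void main(String[] args) {
        String name = "org.apache.commons.Foo";
        System.out.println(abbreviate(name, 1));
        System.out.println(abbreviate(name, 3));
    }
}
```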

The resulting pattern is: %d{yyyy-MM-dd HH:mm:ss.SSS} | %-5level | ${server_name} | %X{IP} | %logger{1} | %thread -> %class{1}#%method:%line | %msg{nolookups}%n%xwEx

The | separator is used because Logstash later splits the fields on it.
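The effect of the separator can be sketched in Java: splitting one line produced by the pattern above into its seven fields. The sample log line, class name and method name are made up for illustration; in the actual pipeline the splitting is done by Logstash, not by application code.

```java
import java.util.regex.Pattern;

public class PipeLogSplitter {

    // The pattern above has 7 fields; the limit keeps any " | " that
    // appears inside the message itself from being split further.
    static String[] split(String line) {
        String[] fields = line.split(Pattern.quote(" | "), 7);
        for (int i = 0; i < fields.length; i++) {
            fields[i] = fields[i].trim(); // drops the padding added by %-5level
        }
        return fields;
    }

    public static void main(String[] args) {
        // Hypothetical log line in the format of the pattern above.
        String line = "2019-05-28 10:00:00.000 | INFO  | demo-server | 10.0.0.1 | Foo"
                + " | main -> Foo#bar:42 | hello | world";
        for (String field : split(line)) {
            System.out.println("[" + field + "]");
        }
    }
}
```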

Output to Kafka


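- bootstrap.servers is the Kafka address; once attached to the Docker network it can be set to kafka:9092.

- topic must match the one configured in Logstash.

- With global async enabled, includeLocation must be set to true for location information such as paths to be printed.

- The Kafka address is read from the application configuration via ${spring:ybd.kafka.bootstrap}. This requires extending Log4j yourself; see "Reading Application Configuration in Log Configuration Files" below.

- %X{IP} and %X{UA} in LOG_PATTERN are put in with MDC.put(key, value). includeLocation="true" must also be set on <Root> to obtain information such as %t and %c.

- KafkaAppender is combined with FailoverAppender so that when Kafka crashes, logging fails over to a file without blocking the program, which preserves throughput.

- retryIntervalSeconds defaults to 1 minute. Switching is triggered by exceptions, so the interval can be increased moderately.

- ignoreExceptions on KafkaAppender must be set to false, otherwise Failover cannot be triggered.

- max.block.ms on KafkaAppender defaults to 1 minute. When Kafka is down, a write attempt takes 1 minute to return an exception, and only then is Failover triggered. Under heavy request load the log4j2 queue fills up quickly, after which log calls block and seriously affect the response time of the main service.

- The log format uses " | " as the separator so that Logstash can split the fields later.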

Output to File

This approach can be used for archiving, while Filebeat picks up the log files and ships them to Logstash.


Using log4j2.yml Instead

An extra dependency is needed for the file to be recognized:

<!-- Required for log4j2.yml to be recognized -->

log4j2.yml:

Configuration:

For more configuration options, see: http://logging.apache.org/log4j/2.x/manual/layouts.html

Reading Application Configuration in Log Configuration Files

Logback

Method 1: use logback-spring.xml. logback.xml is loaded before application.properties, so a variable used in logback.xml that happens to be defined in application.properties cannot be resolved. Renaming the file to logback-spring.xml solves this.

Method 2: use the <springProperty> tag, for example:

<springProperty scope="context" name="LOG_HOME" source="logback.file"/>

Log4j2

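The only option is to write a custom Lookup:

/**

Then use it in log4j2.xml like this: ${spring:spring.application.name}

Custom Fields

MDC can be used to attach custom fields to the current thread:

MDC.put("IP", IpUtil.getIpAddr(request));

In log4j2.xml, read the value with %X{IP}.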

Spring Boot Docker Integration

Prerequisites

- Docker

- An IDE (IDEA is used here)

- Maven

- A private Docker registry; Harbor can be used (see Installing Harbor on Ubuntu)

The plugin needed for Docker integration is docker-maven-plugin: https://github.com/spotify/docker-maven-plugin

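Authentication Configuration

Pushing an image to a Docker registry, whether public or private, usually requires authentication: operations are only allowed after logging in. Logging in from the command line with docker login -u user_name -p password docker_registry_host works, but it is inconvenient for automated workflows. With docker-maven-plugin, authentication is easy to set up.

Plain Configuration

settings.xml:

<server>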

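Maven Password Encryption

Configure access to the private registry in settings.xml:

First, generate the master password from your private registry password:

mvn --encrypt-master-password <password>

Next, create settings-security.xml in the same directory as settings.xml and write the master password into it:

<?xml version="1.0" encoding="UTF-8"?>

Finally, generate the server password from your private registry password and write it into <servers> in settings.xml. (Maven may report that a directory does not exist; the fix is to create a .m2 directory and copy settings-security.xml into it.)

mvn --encrypt-password <password>

<server>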

Building the Base Image

Dockerfile:

FROM frolvlad/alpine-oraclejdk8:slim

Build:

docker build --build-arg HTTP_PROXY=192.168.6.113:8118 -t yangbingdong/docker-oraclejdk8 .

HTTP_PROXY is the HTTP proxy, passed in with --build-arg. Note that it cannot be 127.0.0.1 or localhost.

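Integration

Writing the Dockerfile

Create a docker folder under src/main and add a Dockerfile:

FROM yangbingdong/docker-oraclejdk8:latest

- @@ placeholders fetch the packaged project name dynamically (this needs a plugin, introduced below).

- -Dspring.profiles.active=${ACTIVE:-docker} lets the active profile be changed with -e ACTIVE=docker on the docker run command.

A Note on PID

If the Java program needs to receive the SIGTERM signal, its PID must be 1. This can be achieved with the form ENTRYPOINT exec java -jar ...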
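Once the JVM does receive SIGTERM, a shutdown hook is the standard place to react to it. The sketch below registers one; the class name, helper method and printed messages are hypothetical. The hook also runs on a normal JVM exit, which is why this example prints its message even without a signal.

```java
public class GracefulShutdown {

    // Registers a cleanup task that the JVM runs when it shuts down,
    // for example after SIGTERM reaches the Java process.
    static Thread registerHook(Runnable cleanup) {
        Thread hook = new Thread(cleanup, "shutdown-hook");
        Runtime.getRuntime().addShutdownHook(hook);
        return hook;
    }

    public static void main(String[] args) {
        registerHook(() -> System.out.println("Shutting down, releasing resources"));
        System.out.println("Application started");
        // main returns here; the hook runs as the JVM exits.
    }
}
```

If java is started through a shell without exec, the shell holds PID 1 and the signal may never reach the JVM, so the hook would not run on docker stop.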

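Adding the Docker Image Build Plugins to the pom

When inheriting from spring-boot-starter-parent, none of the three plugins below needs a version number, because the parent already defines them; only docker-maven-plugin does.

spring-boot-maven-plugin

This one needs little introduction: it packages the Spring Boot jar.

<plugin>

maven-resources-plugin

The resources plugin injects Maven variables into the Dockerfile using the @variable@ form. It also copies the built jar into the same directory as the Dockerfile, which makes it convenient to build the image manually.

<plugin>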

build-helper-maven-plugin

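This plugin adds a timestamp-based version to the image. Maven also has built-in timestamp properties, maven.build.timestamp.format and maven.build.timestamp:

<maven.build.timestamp.format>yyyy-MM-dd_HH-mm-ss</maven.build.timestamp.format>

However, this timestamp is in UTC, which is close to Greenwich Mean Time, so the result is 8 hours behind the current local time in GMT+8.

To use GMT+8, the build-helper-maven-plugin is needed. There are other ways to do this, which are not covered here.

<build>

The timestamp is then available in the pom as ${timestamp}.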
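The 8-hour gap described above is easy to reproduce outside Maven. The following sketch formats the same instant in UTC and in GMT+8 with the yyyy-MM-dd_HH-mm-ss pattern; the class and method names are hypothetical, and it only illustrates the time zone difference, not how either Maven mechanism is implemented.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

public class BuildTimestamp {

    // Same pattern as the maven.build.timestamp.format value above.
    static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd_HH-mm-ss");

    static String format(Instant instant, ZoneId zone) {
        return FORMAT.withZone(zone).format(instant);
    }

    public static void main(String[] args) {
        Instant now = Instant.now();
        System.out.println("UTC:   " + format(now, ZoneOffset.UTC));
        System.out.println("GMT+8: " + format(now, ZoneOffset.ofHours(8)));
    }
}
```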

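Alternatively, export an environment variable holding a formatted date before packaging, and read it in pom.xml:

- export DOCKER_IMAGE_TAGE_DATE=yyyy-MM-dd_HH-mm

- mvn help:system lists all environment variables.

- Every environment variable can be referenced as a Maven property prefixed with env.: ${env.DOCKER_IMAGE_TAGE_DATE}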

docker-maven-plugin

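This plugin is the key to integrating and building the Docker image.

<plugin>

Main properties:

<properties>

Notes:

- serverId here must match the one in Maven's settings.xml.

- The Dockerfile is located in src/main/docker.

- If the Dockerfile needs Maven build parameters (such as the name of the packaged file), use @@ placeholders (e.g. @project.build.finalName@). The reason is that the Spring Boot pom changes the resources plugin's placeholder from ${} to @@; a pom that does not inherit from Spring Boot uses ${} placeholders.

- If the Dockerfile does not need to be generated dynamically, the Dockerfile resource copy can be moved into the <resources> configuration of docker-maven-plugin.

- spring-boot-maven-plugin must be declared above the other build plugins, otherwise the packaged file will be wrong.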

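docker-maven-plugin offers many other useful settings; a few parameters are listed here.

| Parameter | Description | Default |
|---|---|---|
| <forceTags>true</forceTags> | Force overwriting tags at build time; used together with imageTags | false |
| <noCache>true</noCache> | Pass --no-cache at build time to skip the cache | false |
| <pullOnBuild>true</pullOnBuild> | Pass --pull=true at build time to re-pull the base image every time | false |
| <pushImage>true</pushImage> | Push the image after the build completes | false |
| <pushImageTag>true</pushImageTag> | Push the image with the specified tags after the build completes; used together with imageTags | false |
| <retryPushCount>5</retryPushCount> | Number of retries when pushing the image fails | 5 |
| <retryPushTimeout>10</retryPushTimeout> | Retry interval when pushing the image fails | 10s |
| <rm>true</rm> | Pass --rm=true at build time to remove intermediate containers after the build | false |
| <useGitCommitId>true</useGitCommitId> | Use the first 7 characters of the latest git commit id as the tag at build time, e.g. image:b50b604; requires that newName is not configured | false |

More parameters can be found in the plugin's definition.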

命令构建

如果<skipDockerPush>false</skipDockerPush>则install阶段将不提交Docker镜像, 只有maven的deploy阶段才提交.

1 | mvn clean install |

1 | [INFO] --- spring-boot-maven-plugin:1.5.9.RELEASE:repackage (default) @ eureka-center-server --- |

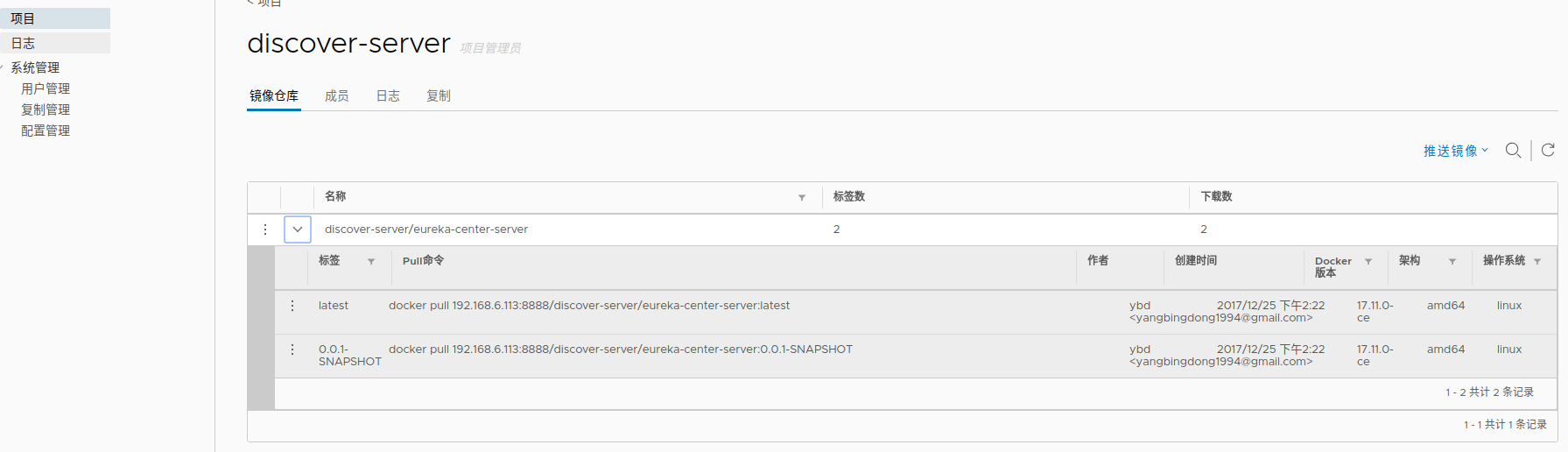

可以看到本地以及私有仓库都多了一个镜像:

此处有个疑问, 很明显看得出来这里上传了两个一样大小的包, 不知道是不是同一个jar包, 但id又不一样:

Running with Docker

Plain Run

Run the application:

docker run --name some-server -e ACTIVE=docker -p 8080:8080 -d [IMAGE]

Running on Docker Swarm

The image in docker-compose.yml is configured through .env, but docker stack does not read the image variables from .env at startup. The following command works around this:

export $(cat .env) && docker stack deploy -c docker-compose.yml demo-stack

Adding Runtime JVM Parameters

Just add -e "JAVA_OPTS=-Xmx128m" to the docker run command.

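Other Docker Build Tools

Jib

Jib is another Docker packaging tool, open-sourced by Google.

jib-maven-plugin: https://github.com/GoogleContainerTools/jib/tree/master/jib-maven-plugin

pom configuration:

<plugin>

See the official documentation for more configuration options.

Dockerfile Maven

This is another plugin that Spotify released after open-sourcing docker-maven-plugin. Usage is roughly as follows:

<plugin>

It feels less flexible than docker-maven-plugin.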

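Getting the Client IP under Docker Swarm

Under Docker Swarm, the client IP seen by a service is always of the form 10.255.0.X. After a lot of searching, the solution settled on was to add a header in the Nginx proxy configuration and read it in the backend.

Add the following in the location block:

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

The backend then takes the first IP in the header.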
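A minimal sketch of that last step, assuming the header value has already been read from the request (for example with HttpServletRequest#getHeader). The class name, method name and fallback parameter are hypothetical.

```java
public class ClientIpResolver {

    // Returns the first entry of a comma-separated X-Forwarded-For value,
    // or the fallback (e.g. the socket's remote address) if the header is absent.
    static String firstForwardedIp(String headerValue, String fallback) {
        if (headerValue == null || headerValue.trim().isEmpty()) {
            return fallback;
        }
        int comma = headerValue.indexOf(',');
        String first = comma < 0 ? headerValue : headerValue.substring(0, comma);
        first = first.trim();
        return first.isEmpty() ? fallback : first;
    }

    public static void main(String[] args) {
        // Hypothetical values: a public client address followed by a proxy hop.
        System.out.println(firstForwardedIp("203.0.113.7, 10.255.0.3", "10.255.0.2"));
        System.out.println(firstForwardedIp(null, "10.255.0.2"));
    }
}
```

Since the header is supplied by the client side of the proxy chain, it should only be trusted when every request is known to pass through the Nginx instance that sets it.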

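Service Registration IP Issues

A machine with Docker installed usually has multiple network interfaces, so the IP picked up during service registration may be wrong. The following options address this (they can be combined):

Option 1: ignore network interfaces by name

spring:

Option 2: use a regular expression to specify the preferred network addresses

spring:

Option 3: use only site-local addresses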

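The checks behind options 1 and 3 can be sketched with the JDK alone. This is an illustration of the idea, not the framework's implementation; the class name, method names and the interface name patterns (docker0, veth.*) are assumptions made for the example.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Arrays;
import java.util.List;

public class InterfaceFilter {

    // Option 1: skip interfaces whose name matches any of the given regexes.
    static boolean isIgnoredInterface(String name, List<String> ignoredRegexes) {
        for (String regex : ignoredRegexes) {
            if (name.matches(regex)) {
                return true;
            }
        }
        return false;
    }

    // Option 3: accept only site-local addresses (10/8, 172.16/12, 192.168/16 for IPv4).
    static boolean isSiteLocal(String ipLiteral) {
        try {
            return InetAddress.getByName(ipLiteral).isSiteLocalAddress();
        } catch (UnknownHostException e) {
            throw new IllegalArgumentException("Not an IP literal: " + ipLiteral, e);
        }
    }

    public static void main(String[] args) {
        List<String> ignored = Arrays.asList("docker0", "veth.*");
        System.out.println("docker0 ignored: " + isIgnoredInterface("docker0", ignored));
        System.out.println("eth0 ignored: " + isIgnoredInterface("eth0", ignored));
        System.out.println("192.168.6.113 site-local: " + isSiteLocal("192.168.6.113"));
        System.out.println("172.17.0.1 site-local: " + isSiteLocal("172.17.0.1"));
    }
}
```

Addresses in the 172.16/12 range, which Docker bridges commonly use, also count as site-local, which is one reason the options may need to be combined.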

Demo

https://github.com/masteranthoneyd/spring-boot-learning/tree/master/spring-boot-docker

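Collecting Logs with Kafka and ELK

A traditional application can write its logs to files, but how should logs be handled once Docker is involved? Write them to a directory in the container and mount it out? That works, but it ties the container to state outside itself, which gets in the way of elastic scaling. Managing Docker logs through a queue plus ELK seems more reasonable to me, and Log4j2 natively provides a Kafka Appender.

Preparing the Images

The ELK images on Docker Hub are not the latest versions; get the latest images from the official site: https://www.docker.elastic.co

docker pull zookeeper

Note: keep the versions of the ELK components consistent.

Starting Kafka and Zookeeper

docker-compose is used directly here (the external network must be created first):

version: '3.4'

KAFKA_ADVERTISED_HOST_NAME is the intranet IP, used for local debugging. In a Docker environment, change it to kafka (matching the value of aliases); other Docker applications can then reach Kafka at kafka:9092.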

ELK配置以及启动

X-Pack 破解

复制Jar包

先启动一个Elasticsearch的容器, 将Jar包copy出来:

1 | export CONTAINER_NAME=elk_elk-elasticsearch_1 |

反编译并修改源码

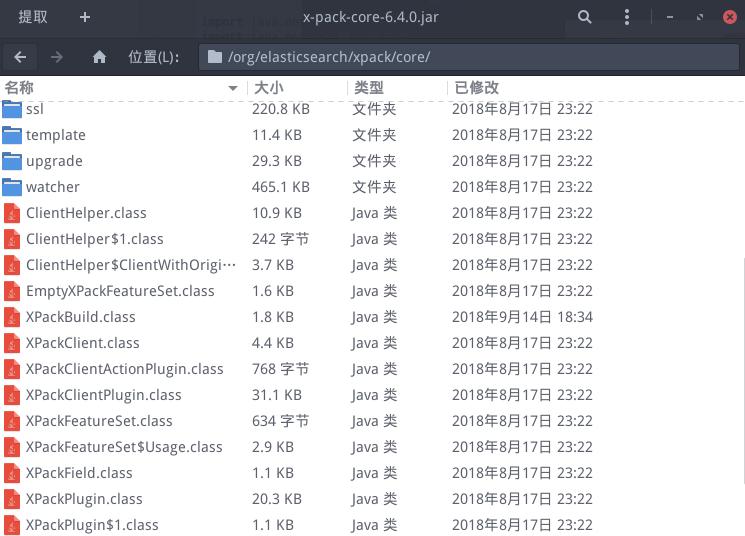

找到下面两个类:1

org.elasticsearch.license.LicenseVerifier.class org.elasticsearch.xpack.core.XPackBuild.class

使用 Luyten 进行反编译

将两个类复制IDEA(需要引入上面copy出来的lib以及x-pack-core-6.4.0.jar本身), 修改为如下样子:

1 | package org.elasticsearch.license; |

1 | package org.elasticsearch.xpack.core; |

再编译放回jar包中:

Configuration Files

Elasticsearch

elasticsearch.yml:

cluster.name: "docker-cluster"

Logstash

Kafka Input

logstash.conf (note that topics below must match the topic in log4j2.xml above):

input {

Filebeat Input

input {

logstash.yml:

http.host: "0.0.0.0"

User-Agent analysis configuration:

# Write the UA into the log; add the following in mutate:

Kibana

kibana.yml:

server.name: kibana

Filebeat

This is the other, file-based way of collecting logs.

filebeat.yml:

filebeat.inputs:

Applying for a License

Go to the license application page, download the license, then modify type, max_nodes and expiry_date_in_millis in it:

{

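Starting ELK

Before starting, note that the official documentation says vm.max_map_count must be at least 262144 in production. There are two ways to set it:

- Permanently, by adding a line to /etc/sysctl.conf:

grep vm.max_map_count /etc/sysctl.conf # look up the current value

vm.max_map_count=262144 # modify or add this line

- On a running machine:

sysctl -w vm.max_map_count=262144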

docker-compose.yml:

version: '3'

After startup, the license must be updated manually with a request:

docker-compose up -d

The output looks roughly like this:

# ybd @ ybd-PC in ~/data/git-repo/bitbucket/ms-base/docker-compose/elk on git:master x [20:52:51]

Dynamic Templates

The mapping that Logstash writes to Elasticsearch can be customized.

output configuration in logstash.conf:

output {

Template configuration:

logstash-template.json:

{

Add the configuration file mapping in docker-compose.yml:

logstash:

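Kibana Settings

Showing All Plugins

Find this at the bottom of the Kibana home page:

Rows per Page in Discover

Find Advanced Settings.

Open it, find discover:sampleSize, then click Edit to change it:

Time Zone

Kibana uses the browser's time zone by default; it can be changed with dateFormat:tz: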

ElasticSearch UI

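Integrating Elastic APM with Spring Boot

Running APM Server

docker-compose:

version: '3'

apm-server.yml:

apm-server:

This file was copied from /usr/share/apm-server/apm-server.yml in the container, with the Elasticsearch URL slightly modified.

If X-Pack is enabled, the username and password must be configured in the yml:

output.elasticsearch:

Integrating with Spring Boot

Download the APM agent.

Add the following to the startup parameters:

java -javaagent:/path/to/elastic-apm-agent-<version>.jar \

After startup, update the index in Kibana's APM module; the result looks roughly like this: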

log-pilot

Github: https://github.com/AliyunContainerService/log-pilot

More details: https://yq.aliyun.com/articles/69382

This is a log collection component open-sourced by Alibaba. It is deployed as middleware and automatically watches the logs of other containers, which is very convenient:

docker run --rm -it -v /var/run/docker.sock:/var/run/docker.sock -v /etc/localtime:/etc/localtime -v /:/host -e PILOT_TYPE=fluentd -e FLUENTD_OUTPUT=elasticsearch -e ELASTICSEARCH_HOST=192.168.6.113 -e ELASTICSEARCH_PORT=9200 -e TZ=Asia/Chongqing --privileged registry.cn-hangzhou.aliyuncs.com/acs-sample/log-pilot:latest

The container whose logs should be collected:

docker run --rm --label aliyun.logs.demo=stdout -p 8080:8080 192.168.0.202:8080/dev-images/demo:latest

- --label aliyun.logs.demo=stdout tells log-pilot to collect this container's logs, with demo as the index.

Then open Kibana to see the logs.

Issues:

- Logs arrive with a slight delay

- Log order is scrambled

- Exception stack traces are not kept together

Finally

References:

https://www.yinchengli.com/2016/09/16/logstash/

https://www.jianshu.com/p/ba1aa0c52942